TL;DR;

Faster I/O! Faster PostgreSQL! Faster ETL! Bad graphics!

What’s going on?

PostgreSQL v18 will feature a new higher-performance I/O system that relies on asynchronous disk access. But it’s no secret, the underlying mechanism is available to us too.

If you’re involved in high-performance data-processing, read on.

This rings a bell.

Regular blog readers will know about our recent contrition when it comes to speeding up I/O. Non-readers can catch up here, but for the click-averse we’ve been stitching together asynchronous streams of data across multiple machines on the network to increase throughput, rather than focusing on the smash-it-into-a-wall-at-200mph approach of multi-threading. In our network-scanning Rust project the time taken was decreased by 230x.

The engineers working on the next version of PostgreSQL[1] have been working on their own version. Instead of each read waiting for the previous one to complete before continuing, the reads will be fired off in unison and the results assembled as they return. PostgreSQL’s engineers quote a 2-3x speed improvement.

Before

After

How have they done it?

The new feature harnesses a native async I/O system in the Linux kernel called io_uring. When io_uring isn’t available (such as non-Linux systems, old kernels or where it’s been deliberately disabled), then the PG system will replicate the functionality by adding in a code shim. It’s not as fast as using io_uring of course, but faster than before.

Thank you, Meta.

That’s not a sentence that’s written often. The sound that accompanies the typing of that sentence was of the author’s gnashing teeth.

Meta’s Jens Axboe[2] created io_uring because traditional I/O requires multiple syscalls (read(), write(), poll(), etc.), which introduce expensive CPU context switches. A context switch is where the current process has its state saved so that the CPU can switch to another process. Upon return, it loads the old process details and carries on.

io_uring reduces the number of syscalls by batching multiple I/O operations into a submission queue and passing these over in one-go to kernel space. Its use of queues removes the kernel polling overheads. This brings significant speed improvements. Seasoned DB migrationeers will recognise the parallels (albeit at a higher level) with bulk-loading data into a database for performance gains.

Contrived analogy

Think of it as having a passport for a school bus, rather than for each child. Instead of each child having their passport checked, the whole bus passes through on a school passport. You can imagine how much faster this would be!

How can we use it?

io_uring can be access natively on Linux systems in Rust, C, C++ and Zig. (Other higher-level languages would required some bindings.)

Here’s an io_uring example in Rust (this is indicative as it was generated by a local LLM).

// Here's some code

use io_uring::{opcode, CirQueue, SubmissionQueue, CompletionQueue};

use std::fs::File;

use std::os::unix::prelude::AsRawFd;

use tokio::runtime::Runtime;

fn main() {

let ring = CirQueue::new(8).unwrap();

let sq = SubmissionQueue::from_raw_fd(&ring, 0).unwrap();

let cq = CompletionQueue::from_raw_fd(&ring, 0).unwrap();

// Open a file for reading and writing

let mut file = File::open("example.txt").expect("Failed to open file");

// Create an `io_uring` operation

let read_op = opcode::Read {

buf: 4096,

fd: file.as_raw_fd(),

offset: 0,

};

// Submit the operation

sq.push(&read_op, 1).unwrap();

// Wait for completion

let cqe = cq.wait().unwrap();

assert_eq!(cqe.result(), Ok(4096));

// Handle the result (for demonstration purposes, we just print it)

let mut buffer = vec![0u8; 4096];

file.read_exact(&mut buffer).unwrap();

println!("Read content: {}", std::str::from_utf8(&buffer).unwrap());

}

Do I have io_uring?

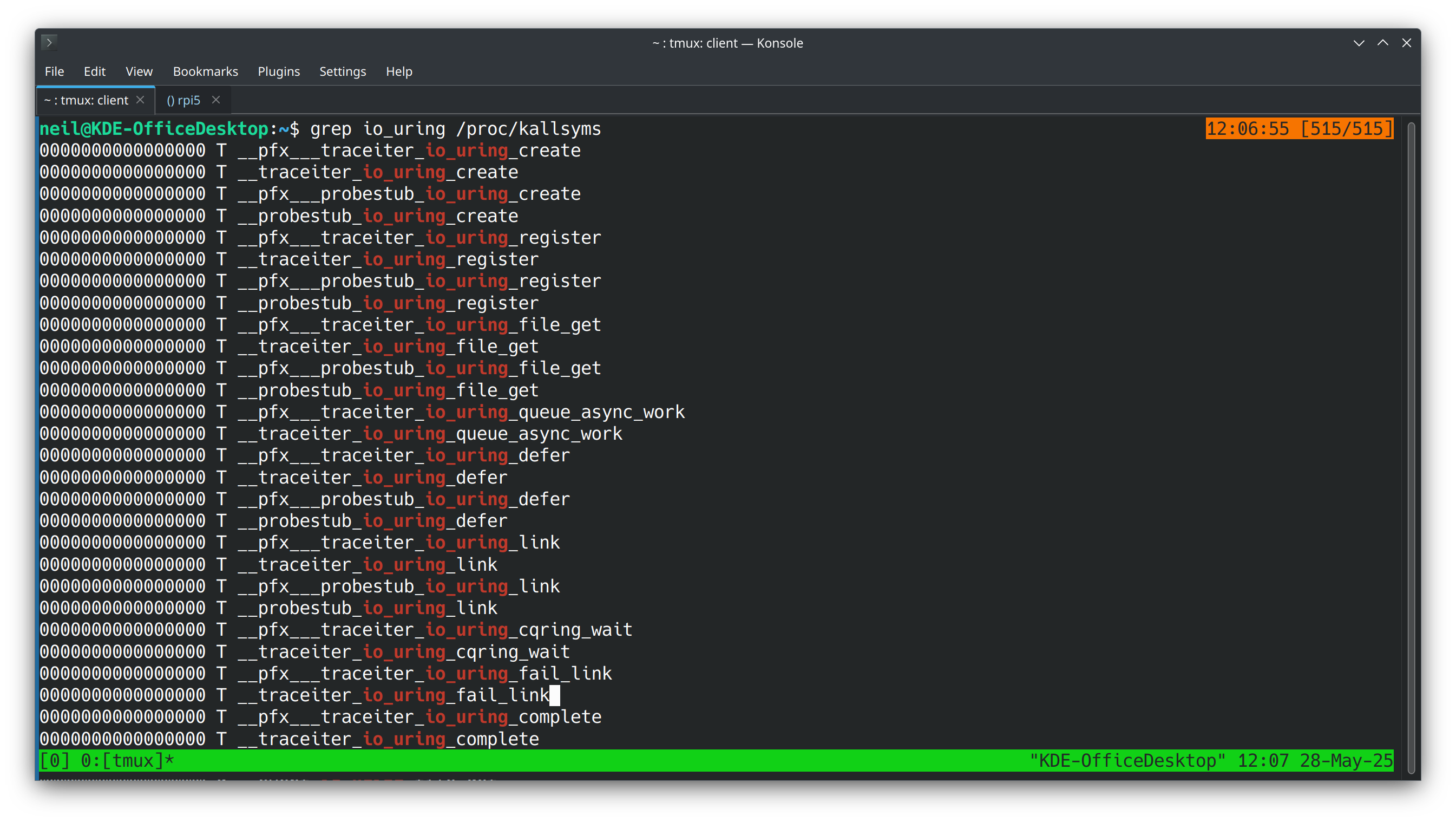

To check if your kernel implements it, run the following. If you see a lot of output featuring io_uring, you have it!

grep `io_uring` /proc/kallsyms

On an example machine, this produced the following output:

Checking at run time

In this snippet, we check for the availability of io_uring on the machine. Based on the output here, we can choose the mechanism we want to use for our data processing. If we have io_uring available then performance benefits are ours for the taking.

use io_uring::IoUring;

fn main() {

match IoUring::new(1) {

Ok(_) => println!("io_uring is supported!"),

Err(e) => println!("io_uring is NOT supported: {}", e),

}

}

What’s in it for us?

High volume processing in work such as ETL is a game of whack-a-mole with bottlenecks. High capacity multi-core parallel-processing workloads rely upon being fed data at a sufficient rate; without this, it’s squandered potential. io_uring can add significant contributions to throughput, providing data for the cores to consume.

For us data engineers, we know the world runs on Linux, and io_uring is available to us in our migration and data engineering projects.

References

[1] PostgreSQL v18 beta release notes: https://www.postgresql.org/about/news/postgresql-18-beta-1-released-3070/) [2] Jens Axboe’s profile https://en.wikipedia.org/wiki/Jens_Axboe