Whence

A client discussion about a proposed stack using NestJS coincided with an internal discussion about a larger-scale high-volume product. That product will remain anonymous for now, but we did have a large dataset of millions of JSON records that could be used for a test load.

There were some high-level tech overlaps (or “synergies” if you’re in marketing): high live volumes were targeted, and the records would be generated by large numbers of individuals as they interacted with an API.

In these scenarios, records aren’t batched for efficiency; each one is POSTed to the server individually.

The great Unlogged

You may have read about unlogged tables in PostgreSQL - the table behaves in the same way as a normal DB table, but if the server crashes or shuts down uncleanly - *pop* - the records are gone (PostgreSQL truncates unlogged tables during crash recovery). Unlogged tables are never written to the WAL (write-ahead log), so their contents aren’t replicated to standby servers either. For simplicity’s sake, we’re treating an unlogged table as analogous to a queue implemented in Valkey/Redis.

In a real implementation, we’d have a mechanism that draws chunks of records from the table and pushes them through processing into real, persisted tables. That worker could be written in any language that scales horizontally. For this test, though, the remit was simple: what’s the performance difference between some basic vanilla stacks in various languages when hammering an API?
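For illustration, here is one way the staging table and the chunked drain could look, written as SQL constants in Rust. This is a sketch under stated assumptions, not the product’s schema: the table names `ingest_unlogged` and `ingest_persisted`, the `id`/`payload` columns, and the assumption that the persisted table has matching columns are all hypothetical. The drain uses a data-modifying CTE so that each chunk is moved in a single statement. It also uses `FOR UPDATE SKIP LOCKED` so that several horizontally scaled workers can drain in parallel without queuing behind each other’s rows.

```rust
/// Hypothetical staging table. UNLOGGED means writes skip the WAL and the
/// contents are truncated after a crash. The primary key adds an index.
pub const CREATE_STAGING_SQL: &str = "\
CREATE UNLOGGED TABLE IF NOT EXISTS ingest_unlogged (
    id      bigint GENERATED ALWAYS AS IDENTITY PRIMARY KEY,
    payload jsonb  NOT NULL
)";

/// Moves up to $1 rows from the staging table into a (hypothetical) logged
/// table with matching columns, in one statement. The CTE deletes a chunk and
/// RETURNING feeds those rows to the INSERT. FOR UPDATE SKIP LOCKED lets several
/// workers drain concurrently, each claiming rows the others have not locked.
pub const DRAIN_CHUNK_SQL: &str = "\
WITH chunk AS (
    DELETE FROM ingest_unlogged
    WHERE id IN (
        SELECT id FROM ingest_unlogged
        ORDER BY id
        LIMIT $1
        FOR UPDATE SKIP LOCKED
    )
    RETURNING id, payload
)
INSERT INTO ingest_persisted (id, payload)
SELECT id, payload FROM chunk";
```

With a driver such as sqlx, `$1` would be bound to the chunk size. A worker would repeat the drain until it inserts zero rows.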

Push harder!

Good people like Bash. A quick script to decompress the files (hello zcat!) and POST them to the API:

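```bash
# A snippet: stream every decompressed JSON line to the API.
# pv shows progress (-l counts lines, -s gives the expected total).
zcat $FILES | pv -l -s "$TOTAL_LINES" | while IFS= read -r line; do
  # One backgrounded curl per record; nothing limits how many run at once.
  echo "$line" | curl -s -X POST -H "Content-Type: application/json" -d @- "$ENDPOINT" > /dev/null &
done
```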

Well, Bash couldn’t keep up with the APIs’ ingestion rate, and it quickly became evident that the loader itself was the bottleneck.

Push harderer!

You know what’s coming next; we rewrote the loading routine in Rust. Bottleneck breached.

Hmmm, that doesn’t sound internetty enough, and the internet does love aggressive language. I know: “Bottleneck CRUSHED”. That’ll do. Not nice, but it’ll do.
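We haven’t reproduced the actual Rust loader here. The following is a hedged sketch of the idea. It sends input lines to a fixed pool of worker threads through a bounded channel. When the workers fall behind, the reader slows down. The Bash loop, by contrast, spawns an unbounded number of `curl` processes. The sketch uses only the standard library (threads rather than Tokio). `load_lines` and its `post` callback are hypothetical names. `post` stands in for whatever HTTP client performs the POST, and it returns whether the POST succeeded.

```rust
use std::io::BufRead;
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Sends every line of `input` to `workers` threads, each calling `post` on it.
/// Returns how many lines `post` reported as successful.
pub fn load_lines<R, F>(input: R, workers: usize, post: F) -> usize
where
    R: BufRead,
    F: Fn(&str) -> bool + Send + Sync + 'static,
{
    assert!(workers > 0, "need at least one worker");
    // Bounded channel: the reader blocks when the workers fall behind (back-pressure).
    let (tx, rx) = mpsc::sync_channel::<String>(workers * 4);
    let rx = Arc::new(Mutex::new(rx));
    let post = Arc::new(post);

    let handles: Vec<thread::JoinHandle<usize>> = (0..workers)
        .map(|_| {
            let rx = Arc::clone(&rx);
            let post = Arc::clone(&post);
            thread::spawn(move || {
                let mut ok = 0;
                loop {
                    // The lock is held only while taking one line off the channel.
                    let line = match rx.lock().unwrap().recv() {
                        Ok(line) => line,
                        Err(_) => break, // channel closed and drained
                    };
                    if (*post)(&line) {
                        ok += 1;
                    }
                }
                ok
            })
        })
        .collect();

    for line in input.lines() {
        let line = line.expect("failed to read input line");
        if tx.send(line).is_err() {
            break; // every worker has gone away
        }
    }
    drop(tx); // close the channel so the workers leave their loops

    handles
        .into_iter()
        .map(|h| h.join().expect("worker panicked"))
        .sum()
}
```

Behind `zcat`, the reader could be `std::io::stdin().lock()`, and `post` would wrap a real HTTP client. The pool size then caps the number of concurrent requests.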

The APIs. 🐝

The original intention was just to test NestJS, but as this was a simple test, it wasn’t too time-consuming to rustle up some others.

Specifically:

- ASP.NET Core MVC with Entity Framework (.NET v8.0.117)

- NestJS with TypeORM (NestJS v11)

- Rust with Tokio and sqlx (Rust 2021 edition, Tokio v1)

- Java with Spring Boot (OpenJDK v21, Spring Boot 3.2.2)

Every version used a client-side connection pool.

Everything ran on a 2019 desktop running Kubuntu 24.04 (Ryzen 3, 16GB RAM, 1TB SSD).

The database was PostgreSQL v17, running on a Raspberry Pi 5 with a 1TB SSD on the local network.

All software was built in “Release” mode where available.

Compare

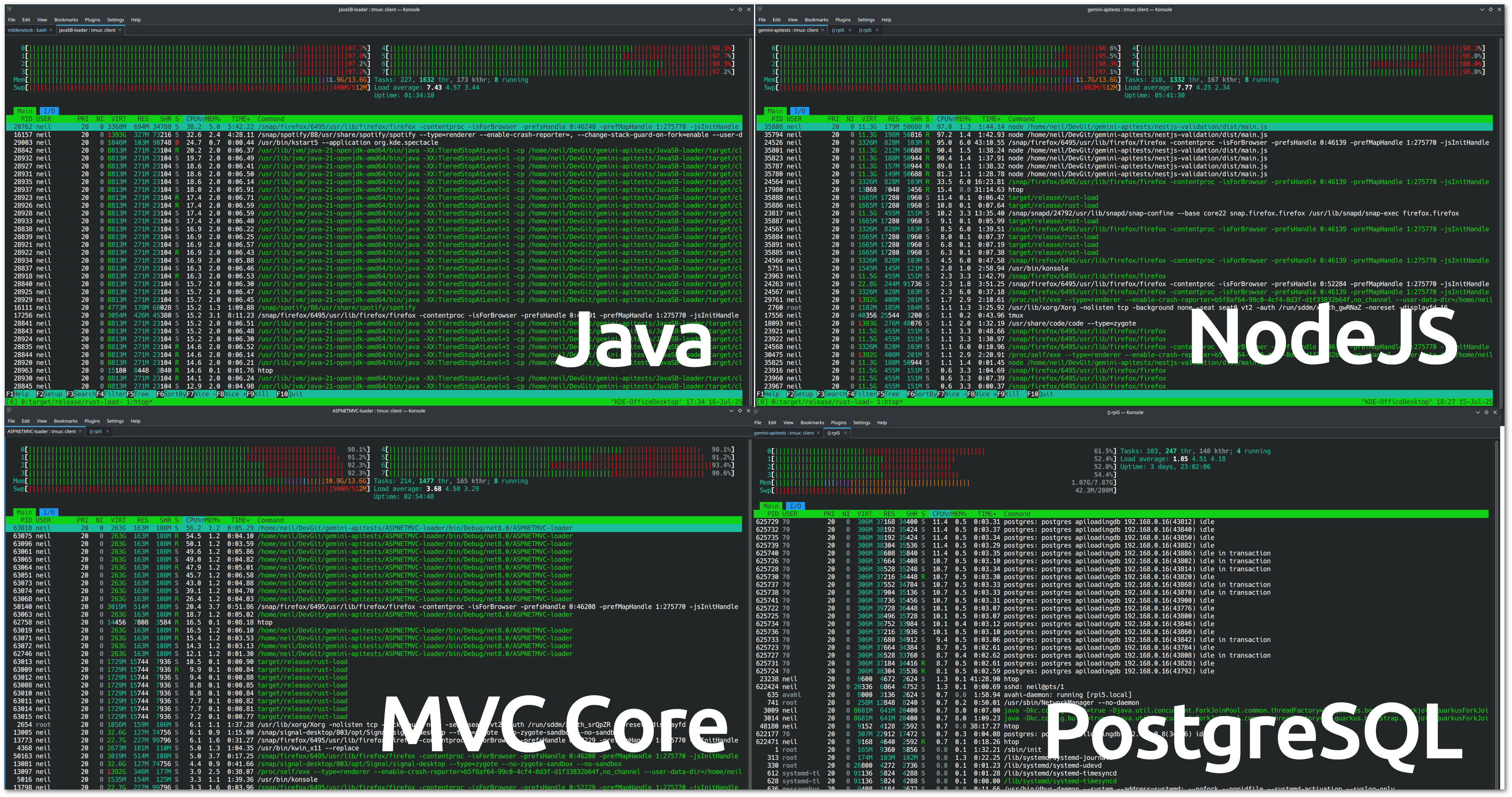

All of the languages bar one drove the client to its limits.

In this montage, see how PostgreSQL (bottom right) was easily able to cope with the volume of data being pushed to it, while each language drove the client’s CPU to the limit on all cores.

“How the fans did whirr and the electricity meter did spineth.”

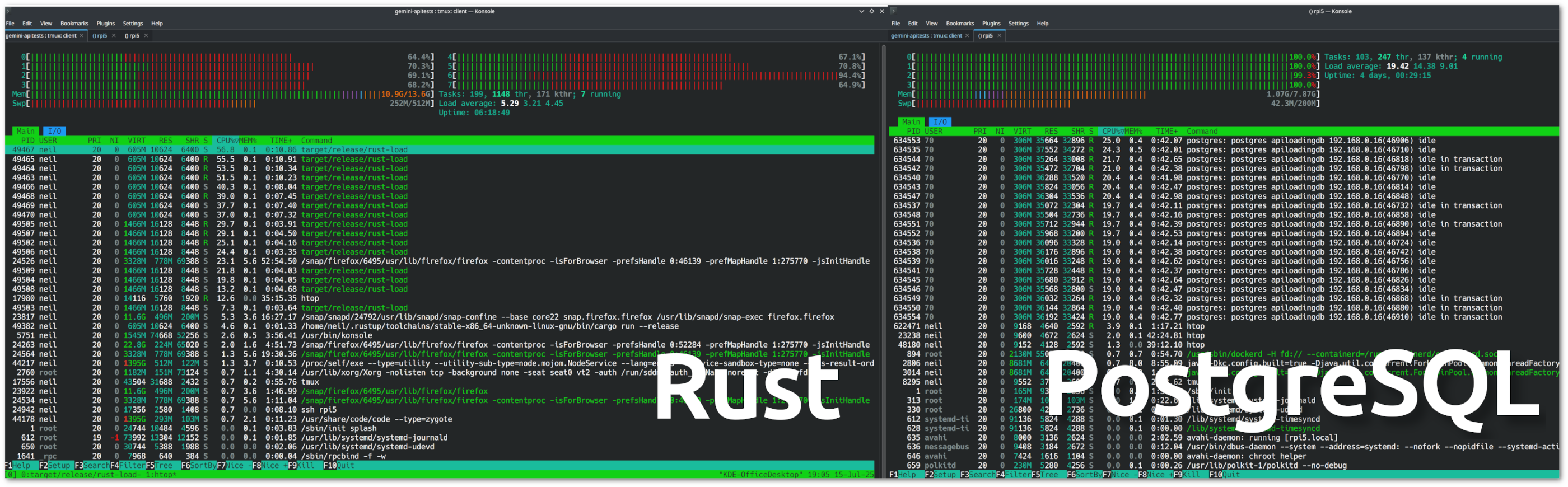

And contrast.

Rust flips the situation around (and we’d expect the same from C/C++). Notice that the client isn’t fully utilised; it’s waiting for the database to catch up, while the PostgreSQL server runs flat out on all cores.

In performance terms?

This is the money shot: the number of records per second each stack handled when importing 1.5 million records individually through the API.

Rust, as expected, monsters the test, with 6.5x the throughput of NestJS.

Anything else?

Yes! Notice the loading speed degradation at around the 750,000 record mark.

This is most probably down to the table getting too little time for vacuuming and statistics updates (the table has a primary key, which gives it an implicit index that must also be maintained on every insert). NestJS wasn’t able to load the database quickly enough to trigger this, so PostgreSQL’s throughput held steady.
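A slowdown like this shows up only when throughput is measured per interval rather than as one overall average. The following is a minimal sketch. It assumes the loader records hypothetical progress samples, each holding the number of records loaded so far and the seconds elapsed. `overall_rate` returns the single records-per-second figure. `interval_rates` returns one figure per interval, which makes a drop part-way through a run stand out. The names and sample values are illustrative, not the data behind the charts.

```rust
/// A hypothetical progress sample: records loaded so far and seconds since the start.
#[derive(Clone, Copy, Debug)]
pub struct Sample {
    pub records: u64,
    pub secs: f64,
}

/// Records/second between two samples; None if time did not advance.
fn rate(a: &Sample, b: &Sample) -> Option<f64> {
    let dt = b.secs - a.secs;
    if dt <= 0.0 {
        return None;
    }
    // Samples are assumed to be in load order, so `records` never decreases.
    Some(b.records.saturating_sub(a.records) as f64 / dt)
}

/// Average records/second from the first sample to the last.
pub fn overall_rate(samples: &[Sample]) -> Option<f64> {
    rate(samples.first()?, samples.last()?)
}

/// Records/second for each interval between consecutive samples.
pub fn interval_rates(samples: &[Sample]) -> Vec<Option<f64>> {
    samples.windows(2).map(|w| rate(&w[0], &w[1])).collect()
}
```

With samples every 250,000 records, a halving of the last interval’s rate stands out clearly, even though the overall average only dips modestly.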

Back in the real world…

Loading single records into the database is pretty wasteful, so in practice we’d put a time-based waterfall cascade proxy in front of it. When rates are high, the proxy aggregates the incoming messages and pushes them into PostgreSQL in batches. If a proxy endpoint hasn’t filled its batch within a pre-determined time (a few hundred milliseconds, perhaps), it pushes the smaller batch into the DB anyway. Once a proxy endpoint reaches its pre-defined number of landed records, the next proxy connection handles the incoming requests and builds its own aggregation, et cetera.
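The core flush rule of that proxy sends a batch when it is full or when a timer expires, whichever comes first. It can be sketched with the Rust standard library alone. This single-aggregator sketch rests on stated assumptions and is not the proxy design itself. `batch_loop`, `max_batch`, `max_wait` and the `flush` callback are hypothetical names. `flush` stands in for a multi-row INSERT or COPY into PostgreSQL. The cascade to the next proxy connection is not modelled.

```rust
use std::sync::mpsc::{Receiver, RecvTimeoutError};
use std::time::{Duration, Instant};

/// Collects messages into batches. A batch is flushed when it holds `max_batch`
/// items or when `max_wait` has passed since its first item arrived.
pub fn batch_loop<T, F>(rx: Receiver<T>, max_batch: usize, max_wait: Duration, mut flush: F)
where
    F: FnMut(Vec<T>),
{
    let mut batch: Vec<T> = Vec::new();
    let mut deadline: Option<Instant> = None; // Some(..) only while the batch is non-empty

    loop {
        let next = match deadline {
            // Empty batch: no timer is running, so block until something arrives.
            None => rx.recv().map_err(|_| RecvTimeoutError::Disconnected),
            Some(d) => rx.recv_timeout(d.saturating_duration_since(Instant::now())),
        };
        match next {
            Ok(item) => {
                if batch.is_empty() {
                    deadline = Some(Instant::now() + max_wait); // timer starts on first item
                }
                batch.push(item);
                if batch.len() >= max_batch {
                    flush(std::mem::take(&mut batch)); // full: flush on size
                    deadline = None;
                }
            }
            Err(RecvTimeoutError::Timeout) => {
                // Quiet period: push the partial batch rather than wait for a full one.
                flush(std::mem::take(&mut batch));
                deadline = None;
            }
            Err(RecvTimeoutError::Disconnected) => {
                if !batch.is_empty() {
                    flush(batch); // drain what is left, then stop
                }
                return;
            }
        }
    }
}
```

A lone record waits at most `max_wait` before it is written. Under load, batches fill and flush on size alone.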

Conclusion

Until the day that cloud providers charge separately for electricity, perhaps tied to a green tariff, most customers won’t care if their code’s written in custard. They’ll add more resources rather than chase efficiency.

Of course, further considerations come into play: coding in NestJS, for example, is rather pleasant. For those familiar with other TypeScript systems such as InversifyJS and Angular, it’s reassuringly comfortable.

So perhaps use the more demanding languages like Rust sparingly, just in the areas that are blocking progress.